Calendly’s Experimentation Evolution

From basic feature flags to a dedicated growth organization.

From Calendly’s founding, the growth story was simple: share your booking link → get a meeting booked → delight (and earn!) a new user → repeat. No paid growth machine. No elaborate funnels. Just a great product that spread effortlessly because people experienced value within seconds.

That viral loop was our earliest form of growth long before we had a formal growth team. But as usage scaled and our product portfolio expanded, we reached a point where organic growth alone wasn’t enough. We needed a more systematic way to understand which user experiences were helping customers and which were getting in their way—both qualitatively and quantitatively—to ensure they get maximum value from our products. This set the stage for our journey into structured experimentation.

We can break the evolution of experimentation at Calendly into three phases:

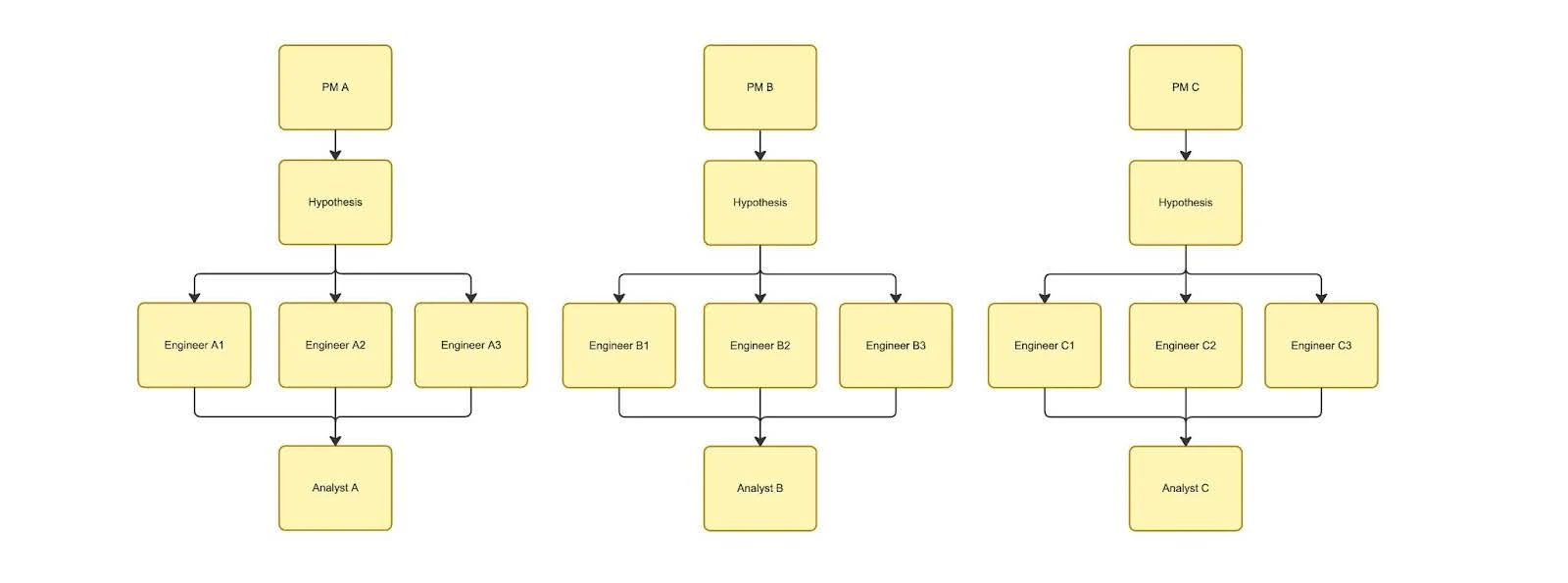

Where we were: isolated experimentation

Where we are: a dedicated growth organization

Where we’re headed: scaling experimentation everywhere

Where we were: Isolated Experimentation

In 2024, Calendly had big ambitions: new product lines and major optimizations. Powering it all was a team of incredibly talented people, including an activation squad running experiments. But if we’re honest, what we needed and didn’t yet have was structure.

Calendly has been using feature flags and lightweight experimentation for almost a decade. It’s a pattern familiar to many SaaS companies: internal users dogfood changes, the changes roll out incrementally, and the team keeps an eye out for errors and user feedback. Typically a few people monitored a dashboard, which allowed us to learn and improve slowly and iteratively over time.

This approach works well for safely shipping new features. But it meant experimentation was mostly isolated to the teams that cared about a specific surface. The result? Learnings stayed local, metrics varied from team to team, and we weren’t compounding what we were discovering. Nothing was broken, but we weren’t learning as effectively as we could, either.

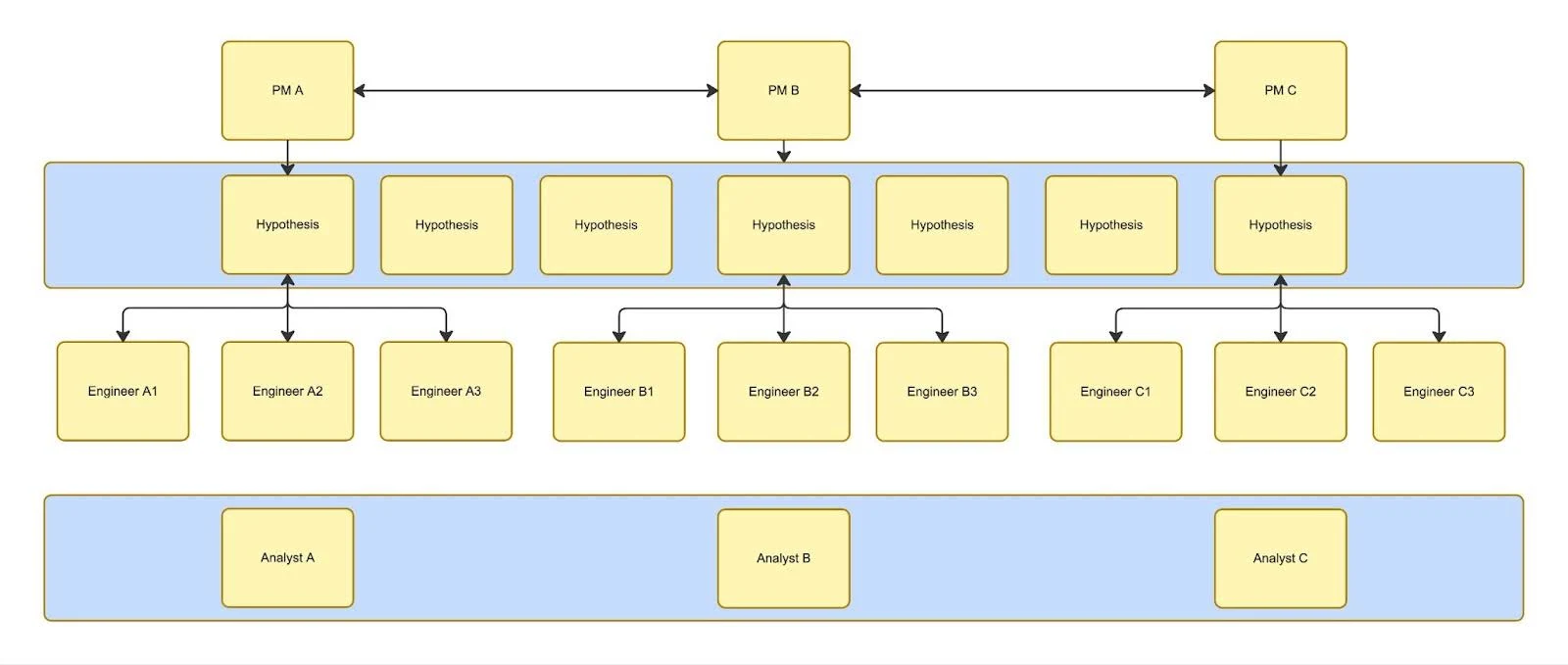

Where we are: Dedicated growth organization

Fast forward to 2025, where we’ve built a dedicated growth organization made up of subject matter experts from across product, marketing, design, analytics, and engineering. Their goal is simple: accelerate learning and scale our impact across the business.

Instead of centralizing every decision under “growth,” we focused on building systems that make experimentation reliable and repeatable for everyone. In doing so, we quickly realized that the frequent manual intervention required from one of our data analysts was creating a bottleneck. A growth org can’t operate at scale if only analysts have access to the right metrics, so we invested heavily in democratizing clean, consistent data.

Metrics Catalog (“Data Packs”)

We created pre-defined metric bundles for key parts of the product experience. Think of traditional growth areas like onboarding, activation, retention, and monetization, as well as unity metrics such as team adoption rate or shared feature usage. These distributed data packs eliminate confusion and keep everyone aligned on what we’re measuring. As time goes on and we learn which additional insights are valuable, we can easily expand this set.

Guardrail Metrics

Every experiment includes both the metrics we want to move and the metrics we absolutely can’t afford to hurt. These are the KPIs we lean on to protect the health of our business as we grow. For example, a first-month subscription discount might improve initial conversion, but if users downgrade immediately after the discount window, that’s not a meaningful win. Guardrails protect us from these traps. We find that our guardrail metrics are often repurposed as the primary metric for other experiments, which allows us to calculate once but benefit several times.
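To make the discount example concrete, here is a minimal sketch of a guardrail check. It is an illustration under stated assumptions, not our production logic: `MetricResult`, `evaluate`, the metric names, and the 2% regression tolerance are all hypothetical, and the sketch deliberately ignores statistical significance.

```python
from dataclasses import dataclass


@dataclass
class MetricResult:
    """Observed value of one metric in control and treatment (hypothetical shape)."""
    name: str
    control: float      # assumed to be non-zero
    treatment: float
    higher_is_better: bool = True

    def relative_change(self) -> float:
        return (self.treatment - self.control) / self.control


def evaluate(primary: MetricResult,
             guardrails: list[MetricResult],
             max_regression: float = 0.02) -> str:
    """Block the rollout if any guardrail regresses by more than max_regression."""
    breached = []
    for g in guardrails:
        change = g.relative_change()
        # "Harm" is a drop for higher-is-better metrics, a rise otherwise.
        harm = -change if g.higher_is_better else change
        if harm > max_regression:
            breached.append(g.name)
    if breached:
        return "blocked: " + ", ".join(breached)

    change = primary.relative_change()
    gain = change if primary.higher_is_better else -change
    return "ship" if gain > 0 else "no_win"


# The discount scenario: conversion improves, but later retention drops.
conversion = MetricResult("trial_to_paid", control=0.10, treatment=0.12)
retention = MetricResult("month_2_retention", control=0.80, treatment=0.70)
decision = evaluate(conversion, [retention])  # the retention guardrail blocks it
```

Because each guardrail is just another `MetricResult`, the same computed metric can serve as the primary metric in a different experiment, which is the "calculate once, benefit several times" idea.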

Consistent Unique IDs Across Platforms

We standardized unique, stable identifiers so we can measure a person’s behavior before signup across web, mobile, and app surfaces without needing to know the person’s identity. It sounds small, but it’s foundational for top-of-funnel experimentation. With third-party tooling coming and going so often, it became evident that we needed one canonical ID that we could rely on over time and own with confidence, one that lets us marry up sessions to known users while respecting their privacy settings.
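As a rough illustration of the idea, the sketch below keeps pre-signup events under a first-party anonymous ID and only stitches them to a known user when that user's privacy settings allow it. Everything here is an assumption made for the example: the `IdentityGraph` class, its method names, and the single `consent_to_stitch` flag are hypothetical and much simpler than a real identity system.

```python
import uuid
from collections import defaultdict


class IdentityGraph:
    """Toy in-memory store linking anonymous IDs to known users (hypothetical)."""

    def __init__(self) -> None:
        self._anon_to_user: dict[str, str] = {}
        self._events: defaultdict[str, list[str]] = defaultdict(list)

    @staticmethod
    def new_anon_id() -> str:
        # A random, first-party ID that carries no personal information.
        return str(uuid.uuid4())

    def track(self, anon_id: str, event: str) -> None:
        self._events[anon_id].append(event)

    def link(self, anon_id: str, user_id: str, consent_to_stitch: bool) -> bool:
        # Respect privacy settings: never stitch without consent.
        if not consent_to_stitch:
            return False
        self._anon_to_user[anon_id] = user_id
        return True

    def events_for_user(self, user_id: str) -> list[str]:
        # Gather events from every anonymous ID linked to this user.
        events: list[str] = []
        for anon_id, linked_user in self._anon_to_user.items():
            if linked_user == user_id:
                events.extend(self._events[anon_id])
        return events


graph = IdentityGraph()
visitor = graph.new_anon_id()
graph.track(visitor, "viewed_pricing_page")
graph.track(visitor, "started_signup")
graph.link(visitor, user_id="user_123", consent_to_stitch=True)
```

The point of owning the ID is that the linking logic lives in one place, regardless of which third-party tools come and go around it.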

Holdout Groups

Shipping a winning experiment doesn’t mean we stop measuring it. We maintain long-running holdouts for key experiences so we can monitor long-term health and avoid being misled by novelty effects. Holdouts help us see if the win holds over time. It can be tempting to roll out an experience to 100% of users based on strong, statistically significant early results, but those numbers can reverse over time! Even if results don’t turn negative, it’s important to understand whether user behavior stabilizes or regresses. Some changes simply need more time before their full impact becomes clear. This is especially true when they affect less common user journeys or downstream metrics that require months of data to evaluate confidently. At the end of the day, holdouts also give growth orgs a data-backed performance outcome, one that shows whether our experiments ultimately improved the business. This discipline is one of the most important parts of a mature experimentation practice, and it’s what keeps us sane as we scale to hundreds of experiments.
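One common way to keep a holdout stable over months is deterministic, hash-based assignment: the same ID always lands in the same bucket, with no lookup table to maintain. The sketch below shows that general technique only. It is not a description of how any particular platform assigns holdouts, and the function names, the salt, and the 5% default are hypothetical.

```python
import hashlib


def bucket(unit_id: str, salt: str, buckets: int = 10_000) -> int:
    """Map an ID to a stable bucket in [0, buckets) using SHA-256."""
    digest = hashlib.sha256(f"{salt}:{unit_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def in_holdout(unit_id: str, holdout_name: str, holdout_pct: float = 5.0) -> bool:
    """Return True if this unit never receives the shipped experience.

    Salting with the holdout name keeps different holdouts independent,
    so the same users are not held out of everything.
    """
    # With 10,000 buckets, each bucket is 0.01% of traffic.
    return bucket(unit_id, holdout_name) < holdout_pct * 100


# Assignment depends only on the ID and the holdout name, so it is
# repeatable across sessions, services, and deploys.
held_out = in_holdout("stable-id-42", "onboarding_2025_holdout")
```

Comparing the held-out group with everyone else months later is what shows whether an early win persisted or faded.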

Formal Experiment Readouts

We introduced a more structured, consistent readout cadence across growth as a new ritual. These aren’t “status updates.” They’re intentional opportunities to improve our craft. During these sessions, we highlight what made an experiment strong, share surprising outcomes, and critique the setup and tracking. We find that this cross-functional pollination is a fantastic way to bring together brilliant folks with vastly different views of our products and customers. Because our products serve an incredibly multilingual, multinational audience, we need the kind of broad perspective that gives us a full-circle view and becomes the fuel for the next wave of ideas. Ultimately, we’re trying to build shared judgment, not just share dashboards.

Growth MVP Awards: Celebrating the Right Behaviors

Growth can involve a lot of invisible work: fixing instrumentation, chasing down metrics issues, cleaning up flags, or iterating on “failed” tests that unlock bigger wins later. We introduced Growth MVP awards to recognize people who go above and beyond, operate in a way that aligns with our company values, and deliver meaningful impact. Our goal is to reinforce the curiosity, collaboration, and craftsmanship we want to scale.

Rapid Ideation → Execution → Follow-Up

One of the clearest signs of our maturity is how quickly we move now. We’re running follow-up tests within days when we spot opportunities to improve. Failed experiments have become fast retries rather than dead ends. We’ve also evolved how we scope implementations so they’re impactful, yet narrow and flexible. When we want to learn more than one thing, we run each experiment with a single change in mind while keeping the ability to launch another test without any new code. We are now in a place where wins get iterated on immediately, before momentum cools. Whether an experiment succeeds or not, the learnings translate directly into next steps while the topic is still fresh. We’re not just running tests; we’re running an ongoing, hypothesis-driven evolution of experiments.

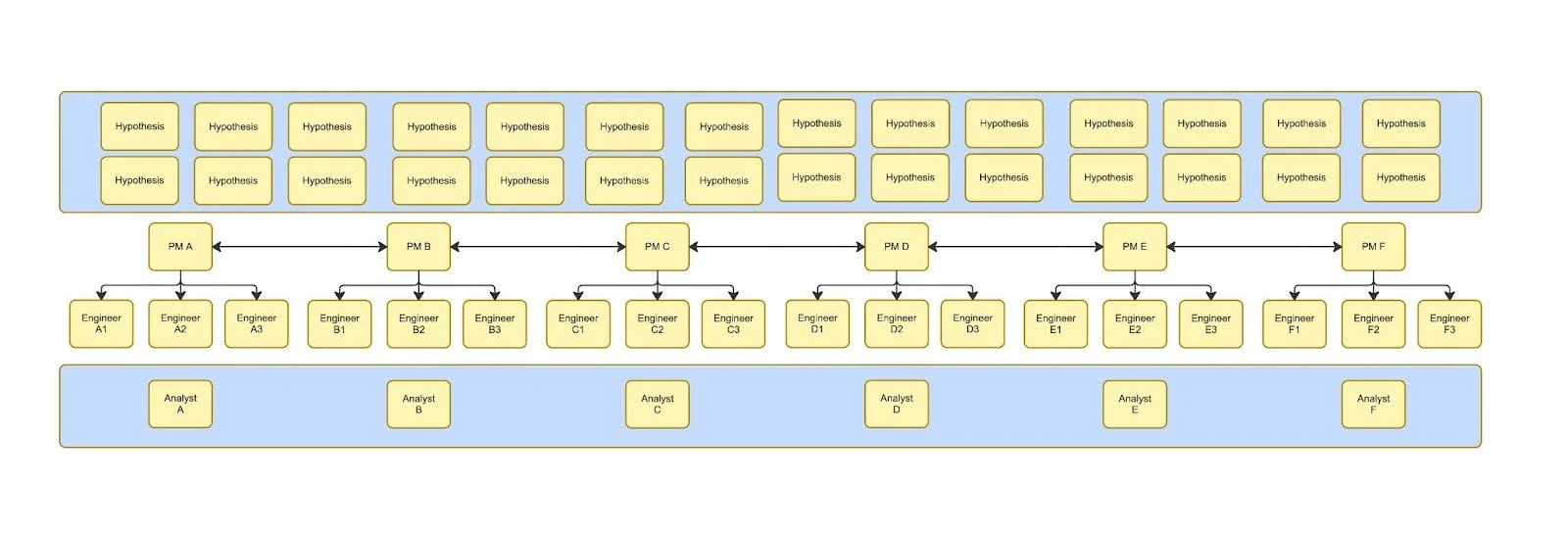

Where we are headed: scaling experimentation everywhere

The next chapter of growth at Calendly means making experimentation a first-class capability across the entire company.

AI is accelerating how quickly our teams can ideate, build, and ship. But speed only matters if we’re delivering real value. Experimentation is how we validate that value at scale, ensuring that qualitatively and quantitatively we’re building for the better. Here’s where we’re going next.

Scaling to More Teams

We want more teams across Calendly to be able to run high-quality experiments without reinventing the process. To do that, we must start with standardization. Right now, teams need to invest time to understand our tool stack and how to properly set up an experiment for success. Standardization means making our experimentation tooling easier to use and removing complexity or overhead where it isn’t needed. We need to protect the hygiene of experimentation, ensuring that pass/fail evaluations happen in the right place and that core steps are consistent. These are details we should not have to relearn across the company. To ensure teams are supported, we will embed growth experts where it makes sense. Intricate details like measuring the exact moment of exposure are small but critical parts of running an accurate experiment. We want to ensure that new teams are set up for success with low risk. This also helps build a culture where learning is the default and teams are tuned to iteratively build and test their way toward delivering valuable products for our customers.

Improving Tooling and Conventions

We’re investing heavily in making experimentation cleaner and easier to maintain. After nearly a decade of running experiments, we have some debt to address. We want to remove stale feature gates and flags from our internal and external systems, align on naming conventions, automate detection of old flags for auditing, improve experiment metadata, and unify tracking setups. We have the opportunity to remove a lot of manual, error-prone work that happens today and only runs smoothly because a centralized group handles it on repeat. We’re raising the bar so teams don’t have to think about the plumbing. The more boring and predictable the system is, the better the experiments get. This could mean entering experiment information in one place and then kicking off a process that configures the experiment, automatically sets up dashboards, and connects to the communication tools we use to keep teams informed. This type of standardization reduces human error, creates consistency, and makes the system more predictable.
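Automated detection of old flags can start very simply. The sketch below is one possible rule, under assumptions we are making purely for illustration: the `Flag` record, its fields, and the 90-day threshold are hypothetical and do not reflect the schema of any flagging tool. A flag is treated as stale when it has been fully on or fully off, and unchanged, for longer than the threshold.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Flag:
    """Minimal flag metadata for auditing (hypothetical schema)."""
    name: str
    rollout_pct: int      # 0 to 100
    last_changed: date


def stale_flags(flags: list[Flag], today: date, max_age_days: int = 90) -> list[str]:
    """Return names of flags that look safe to audit for removal."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        f.name
        for f in flags
        # Fully rolled out or fully off, and untouched since the cutoff.
        if f.rollout_pct in (0, 100) and f.last_changed < cutoff
    ]


flags = [
    Flag("new_onboarding_checklist", 100, date(2024, 11, 1)),
    Flag("pricing_page_test", 50, date(2024, 10, 1)),       # still splitting traffic
    Flag("booking_flow_redesign", 100, date(2025, 5, 20)),  # changed recently
]
candidates = stale_flags(flags, today=date(2025, 6, 1))
```

A list like `candidates` is only a starting point for an audit; a human or a follow-up check still needs to confirm the code path behind each flag can be removed.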

Experiment Scorecards

We’re building scorecards to track experiment quality, iteration speed, and our cleanup pace. We want to track the entire lifecycle of an experiment and avoid leaving any dead code paths in our system. Dead code makes quality assurance riskier, can introduce security flaws, slows load times, adds cognitive load for engineers, and is generally considered poor practice. We will design the scorecards so we can also measure the impact across the entire org. Think of growth loops, and compounding growth loops, where improving something like virality can amplify an entirely different team's onboarding flow. We want to better understand the impact and blast radius of our work to inform ourselves and partner teams. We also need to address where experiments go wrong! This includes counts and details on common breakdowns or blockers that prevented the experiment from being healthy. This helps us see patterns and improve the system faster.

Agentic Workflows (“Write Once, Read Many”)

We’re leaning heavily into automation and AI-assisted workflows. These systems will auto-generate experiment scaffolds and set up monitoring automatically. They can help evaluate statistical significance or send rollout recommendations into the tools where our team spends their time, so we act faster without having to remember to check performance. We will also implement automation that produces experiment summaries on demand, after completion, or both. These tools will notify teams of health issues. Statsig provides some alerts out of the box, although they do not include the internal product context that often requires institutional knowledge today. Our goal is to increase throughput without sacrificing quality.
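For readers who want to see what "evaluate statistical significance and send a rollout recommendation" can reduce to, here is a minimal sketch using a textbook two-proportion z-test. It is a simplification for illustration and not the statistics engine of any experimentation platform; the function names, the 0.05 threshold, and the recommendation strings are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) comparing conversion rates of A and B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups converted at 0% or 100%: no detectable difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def recommend(conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05) -> str:
    """Turn the test result into a message an automation could post."""
    z, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    if p_value >= alpha:
        return "keep running"
    return "roll out" if z > 0 else "roll back"


# Control: 100 conversions out of 1,000. Treatment: 150 out of 1,000.
message = recommend(100, 1000, 150, 1000)
```

A real workflow would layer guardrail checks, minimum run times, and product context on top of a raw significance test before recommending anything.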

Conclusion

As we close out the year, we are already looking forward to how we can 5x to 10x the number of experiments that we run across the company. This year has been incredibly rewarding. We have learned quickly, adapted to the changing trends in software, and increased our experiment throughput. These changes have helped our teams grow professionally and have improved the quality of our software and outcomes for customers.

Appreciation

Thank you to our Data Platform and Analytics groups for helping set us up for success in our migration of feature flags to a more stable and feature-rich Statsig product.

None of this progress happens in a vacuum, and I want to call out the teams who made our evolution possible. Growth only works when the rest of the organization believes in the mission, and we’ve been incredibly lucky on that front. Huge thanks to our partners across Engineering, Product, Marketing, and our Analytics group for their collaboration, patience, and willingness to iterate. They’ve supported every experiment, every instrumentation request, every follow-up, and every random “does this metric look right?” message, and they’ve done so with passion.

I also want to thank our leadership team for giving us the opportunity, space, and investment to build a real experimentation practice at Calendly. Their trust in the process is what allows growth to actually do its job.

And finally, a massive shoutout to Statsig. Their team guided us through a complex migration, met our dynamic needs, and consistently went above and beyond to make sure we have a platform we can scale on. Their support has been nothing short of exceptional, and it’s played a huge role in helping us evolve our experimentation engine.
