Beating the Clock: Predictive Horizontal Pod Autoscaler (HPA) with Datadog Analytics


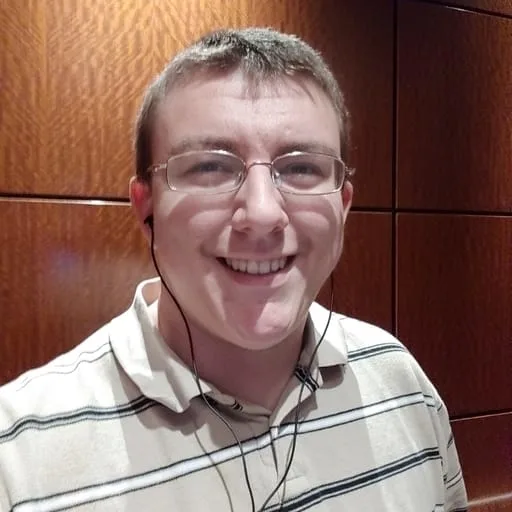

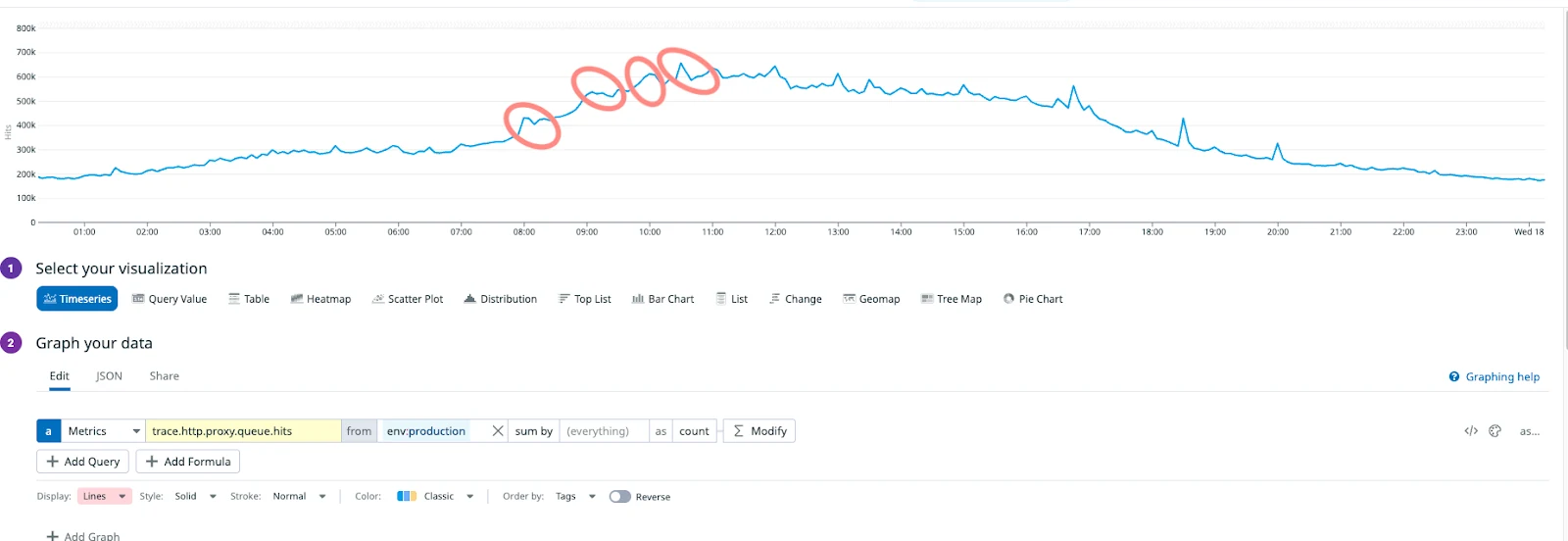

At predictable intervals, on the hour and on the half-hour, users arrive at the Calendly application. They click "schedule," "book," and "confirm," sending a flurry of emails to invitees and driving sudden traffic spikes. For a large application like Calendly, startup isn't instantaneous, so while the application scales up, users pay a performance penalty. Even when the system remains technically 'healthy', these surges predictably increase latency and degrade the user experience.

Our Horizontal Pod Autoscaler (HPA) functioned precisely as intended, scaling resources in direct response to traffic load. However, even the HPA reacts with some inherent delay, and small as that delay is, it proves costly during peak demand. While the scaling signal was accurate, it consistently lagged behind real-time need, causing web threads to wait several seconds for available servers at critical moments.

This post presents a solution that uses historical traffic data to drive predictive autoscaling, eliminating the added latency during predictable demand spikes without extra tools or manual scheduling. By time-shifting historical data and applying smoothing, we give the HPA advance notice of anticipated load changes. This happens entirely within the autoscaling system itself, with no cronjobs, synthetic traffic generation, or manual scaling interventions. For systems with repeatable load patterns and slow startup times, this simple technique can drastically improve reliability and user experience.

Background

Our application runs on Puma, and our pods take a relatively long time to boot. When user traffic increases suddenly, new pods don't come online fast enough to absorb the load. The result is temporary queueing at the application layer, which raises request latency and occasionally causes user-visible slowdowns. The HPA monitors Puma saturation using the following formula:

(puma.max_threads - puma.pool_capacity) / puma.max_threads
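To make the ratio concrete, here is a worked example with hypothetical numbers. Puma's pool_capacity roughly reports how many more requests a worker can accept right now without queueing, so max_threads minus pool_capacity approximates the number of busy threads. A worker with 5 max threads and 1 slot of spare capacity is 80% saturated (the Datadog query later in this post multiplies the ratio by 100 to express it as a percentage):

$$
\text{saturation} = \frac{\mathtt{max\_threads} - \mathtt{pool\_capacity}}{\mathtt{max\_threads}} = \frac{5 - 1}{5} = 0.8 = 80\%
$$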

While this gave us a clear saturation ratio and autoscaling threshold, we discovered a fundamental limitation: reactive scaling only triggers after problems have already started. When traffic spiked sharply and without warning, the saturation threshold was crossed only once the spike was already underway. Users were experiencing slowdowns before the HPA even reacted, let alone before new pods could come online to handle the load. We needed a way to anticipate these spikes and shift from reactive to predictive scaling, spinning up capacity before saturation occurred.

Leveraging Week-over-Week Signals

Searching for a solution, we noticed that our traffic patterns are consistent week-over-week, and the same spikes generally happen at the same times of day: 12:00, 12:30, 1:00, and so on. Rather than fabricate a predictive metric, we decided to use our own historical behavior as a leading indicator.

Datadog, our application performance monitoring tool, lets us apply a timeshift() function to metric queries. We configured a new query that retrieves the saturation signal from one week ago, shifted five minutes ahead of the current time of day: enough of a buffer to allow pre-scaling, but not so early that we'd be scaling unnecessarily far in advance.
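The offset in the query is plain arithmetic. One week is 604,800 seconds; subtracting the five-minute (300-second) lead gives the -604500 used in the query. Because timeshift() with a negative offset returns the value from that many seconds in the past, the query evaluated at time t reads last week's value at t + 5 minutes:

$$
\Delta = 7 \times 24 \times 3600 - 5 \times 60 = 604800 - 300 = 604500\ \text{s}
$$

$$
\text{predicted}(t) = \text{saturation}(t - \Delta) = \text{saturation}(t - 1\,\text{week} + 5\,\text{min})
$$

For example, at 12:25 this Tuesday, the query returns last Tuesday's 12:30 saturation.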

To further stabilize the signal, we applied a three-point exponentially weighted moving average (ewma_3), which keeps transient noise, such as an unusual one-off burst, from triggering a scale event on its own.
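As a sketch of what that smoothing does, assume ewma_3 follows the common span convention, where a span of N points uses the weight α = 2/(N+1). We have not confirmed Datadog's exact weighting, so treat the constant as an assumption. With N = 3, α = 0.5, and each smoothed point averages the newest sample with the previous smoothed value. Using hypothetical saturation samples of 50 and then 10 after a steady 10:

$$
s_t = \alpha x_t + (1 - \alpha)\, s_{t-1}, \qquad \alpha = \frac{2}{N + 1} = \frac{2}{3 + 1} = 0.5
$$

$$
s_0 = 10, \qquad s_1 = 0.5(50) + 0.5(10) = 30, \qquad s_2 = 0.5(10) + 0.5(30) = 20
$$

A single-sample burst to 50 surfaces as a peak of only 30 and then decays. A spike that persists across several samples still pulls the smoothed value toward its true level (a second sample of 50 would give 0.5(50) + 0.5(30) = 40), so sustained load is not hidden.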

HPA Integration

Kubernetes supports multiple external metrics in a single HPA configuration. When multiple scaleOn entries are defined, the HPA evaluates each of them and scales to the highest recommended replica count. This means we can keep our original, real-time metric and add the predictive one alongside it without interference. Here is the Datadog query we used for the predictive metric:

query: "ewma_3(timeshift(((avg:puma.max_threads - avg:puma.pool_capacity) / avg:puma.max_threads) * 100, -604500))"

# Uses last week's puma saturation (+5min) with EWMA to predictably pre-scale

This HPA modification is entirely declarative and fully compatible with our existing GitOps workflows.
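For readers wiring this up directly, here is a minimal sketch of how the same idea might be expressed with upstream Kubernetes autoscaling/v2 and the Datadog Cluster Agent's DatadogMetric resource. It assumes the Cluster Agent is deployed as the external metrics provider with DatadogMetric support enabled. The resource names, namespace, replica bounds, the target of 60, the {*} tag scope, and the real-time metric's name are all hypothetical placeholders, not our production configuration.

```yaml
# Hypothetical sketch: names, namespace, scope, bounds, and targets are placeholders.
apiVersion: datadoghq.com/v1alpha1
kind: DatadogMetric
metadata:
  name: puma-saturation-predictive   # hypothetical name
  namespace: web                     # hypothetical namespace
spec:
  # Last week's saturation, shifted 5 minutes ahead and smoothed with ewma_3.
  # {*} is a placeholder scope; narrow it to the tags of the service being scaled.
  query: "ewma_3(timeshift(((avg:puma.max_threads{*} - avg:puma.pool_capacity{*}) / avg:puma.max_threads{*}) * 100, -604500))"
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web
  namespace: web
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web
  minReplicas: 10
  maxReplicas: 100
  metrics:
    # Real-time saturation; assumed to be a second DatadogMetric (not shown).
    - type: External
      external:
        metric:
          name: datadogmetric@web:puma-saturation-realtime
        target:
          type: Value
          value: "60"
    # Predictive saturation from the DatadogMetric above.
    # The HPA computes a replica count per metric and uses the largest.
    - type: External
      external:
        metric:
          name: datadogmetric@web:puma-saturation-predictive
        target:
          type: Value
          value: "60"
```

Keeping both metrics on the same target means the predictive entry can only add capacity earlier; it never lowers the replica count the real-time metric asks for.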

Runtime Behavior

Let's say traffic spiked at 12:30 PM last Tuesday. This Tuesday at 12:25 PM, the time-shifted metric begins to rise. Because the HPA watches both metrics, it sees the predictive signal first and begins adding pods. At 12:30, the real-time metric rises as well, confirming the spike while the extra capacity keeps the system stable. Once load decreases, both metrics drop below the threshold and the system scales back down. In practice, this gives us a five-minute window to absorb load early without introducing artificial mechanisms or manual scheduling.
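The "highest recommendation wins" behavior can be checked against the scaling rule in the Kubernetes HPA documentation for a metric with a Value target: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). With hypothetical values of 10 running pods, a 60% saturation target, a predictive reading of 75% at 12:25, and a real-time reading of 40%:

$$
\text{predictive: } \left\lceil 10 \times \frac{75}{60} \right\rceil = \lceil 12.5 \rceil = 13
\qquad
\text{real-time: } \left\lceil 10 \times \frac{40}{60} \right\rceil = \lceil 6.67 \rceil = 7
$$

$$
\text{desiredReplicas} = \max(13,\ 7) = 13
$$

The HPA scales to 13 pods five minutes ahead of the spike, even though the real-time metric alone would have recommended 7. This arithmetic ignores the HPA's tolerance band and scale-down stabilization window, which further smooth the actual replica count.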

On Signal Dampening and Feedback

A fair concern raised during review was whether successful pre-scaling might unintentionally weaken its own signal. If saturation is lower this week due to an effective early scale-up, might next week’s predictive metric — based on that dampened signal — not trigger in time, leading to missed spikes?

Yes, occasionally. But this is a conscious tradeoff.

We'd rather occasionally miss a spike than over-provision constantly. The time-shifted metric doesn't vanish when we pre-scale; it simply softens slightly with each successful scale-up. For example, a spike that once drove Puma saturation to 40% might only reach 35% the following week. That's still a signal, just a less urgent one.

As long as the time-shifted saturation metric stays above the threshold, pre-scaling will trigger. If it doesn't, we save on compute without compromising normal HPA behavior. And if we do miss a spike, the system still scales reactively as it always has, and the resulting higher saturation re-seeds the signal for the following week.

In effect, we’ve introduced a feedback loop. It damps sharp spikes over time without inflating baseline usage. The result is a smoother, more stable scaling pattern that favors efficiency without sacrificing responsiveness.

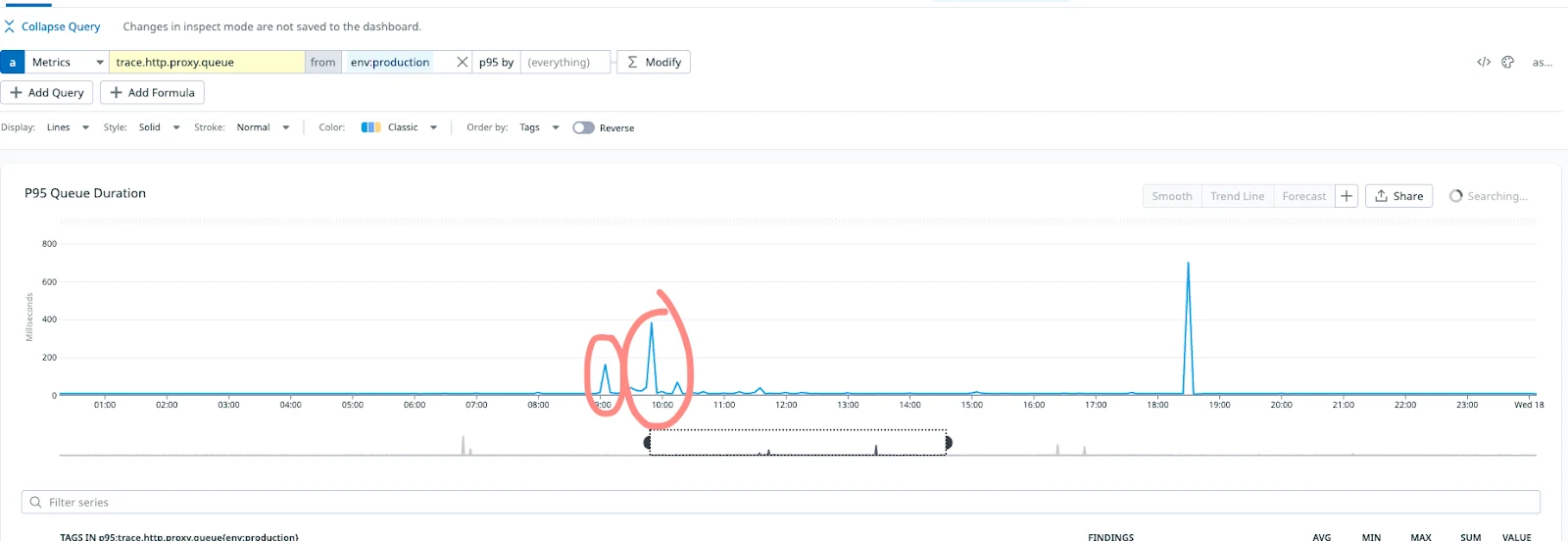

Conclusion

After deploying this configuration, we observed early scale-ups ahead of high-traffic windows, exactly as intended. Queuing dropped, startup penalties decreased, and no special orchestration was required. The solution continues to evolve, but even in its initial form, it has outperformed our previous attempts. We now see scaling begin before our peak traffic events, along with lower queue times and a higher Apdex score.

For workloads with predictable traffic patterns and slow start times, this method offers a clean, low-maintenance way to pre-scale based on real, historical data. It requires no custom scheduling, no synthetic metrics, and no new tooling: just one line of YAML and a deeper understanding of your own traffic.

Appreciations

We’d like to thank our colleagues across Calendly Engineering for their continued dedication to performance and reliability. The improvements described here were made possible by their deep understanding of our systems and their commitment to investigating even subtle signs of abnormal behavior. Because of their diligence, our users now enjoy a smoother experience during peak traffic periods.

Next Steps

Calendly is a high-traffic platform that must constantly evolve to maintain performance and reliability at scale. Looking ahead, we’ll continue investing in both infrastructure and application-level improvements ranging from product flow optimizations to further innovations in autoscaling and observability. Each of these efforts shares the same goal: delivering the fastest, most dependable scheduling experience possible for our users.
